What the heck are dbt artifacts?

You'll know everything about them after reading this post.

When you run dbt commands, they generate a significant amount of metadata: the compiled SQL of your models (with the Jinja rendered away), the compiled code of generic tests, and the DAG used by dbt docs. These files are collectively known as dbt artifacts and can be seen as byproducts of your dbt commands. Each time you run a dbt command, the relevant artifacts are updated to reflect the latest state of your project.

dbt artifacts have various applications. One of the most popular is dbt packages that read the artifacts to gather information about your project directly. Another is data observability, since the artifacts conveniently store model runtimes, failed tests, and source freshness information.

“dbt artifacts is a way to make analytics on your analytics”

(thanks to Emily Hawking for this cleverly expressed thought)

In today's post, I will walk you through dbt artifacts and, hopefully, inspire you to use them at work to improve the efficiency and performance of your dbt project.

What are dbt artifacts exactly?

dbt artifacts are JSON files that are written to your project's target/ directory whenever you run dbt commands. Each command produces different artifacts.

There are five types of artifacts:

manifest.json – a file that contains all info about your dbt project and its resources (models, tests, macros, etc). Each resource also contains information about configuration and properties.

run_results.json – stores info about a completed dbt invocation, including model timing, test results, the number of records captured by dbt snapshots, etc.

catalog.json – created by running dbt docs generate and contains info from the data warehouse about the generated views and tables (number of rows, column types, etc).

sources.json – produced by the dbt source freshness command and contains info about sources and their freshness.

semantic_manifest.json – stores comprehensive information about the dbt Semantic Layer.
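To see which of these files your last commands actually produced, a small script can help. This is a minimal sketch, assuming the artifacts live in a local target/ folder; the helper name summarize_artifacts is mine, not part of dbt. It reads the dbt_version and generated_at fields from each file's metadata when they are present.

```python
import json
from pathlib import Path

# The five artifact files described in the list above.
ARTIFACT_NAMES = [
    "manifest.json",
    "run_results.json",
    "catalog.json",
    "sources.json",
    "semantic_manifest.json",
]


def summarize_artifacts(target_dir):
    """Return {file name: metadata summary}, or None for files that are absent."""
    summary = {}
    for name in ARTIFACT_NAMES:
        path = Path(target_dir) / name
        if not path.exists():
            summary[name] = None
            continue
        with path.open() as f:
            data = json.load(f)
        # semantic_manifest.json has no "metadata" key, hence the .get() calls.
        metadata = data.get("metadata", {})
        summary[name] = {
            "dbt_version": metadata.get("dbt_version"),
            "generated_at": metadata.get("generated_at"),
        }
    return summary


if __name__ == "__main__":
    # "target" is an assumed location; adjust it to your project layout.
    for name, info in summarize_artifacts("target").items():
        print(name, "->", info if info is not None else "not generated yet")
```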

Let's quickly dive into each file to discover any interesting findings.

manifest.json

The manifest file is produced by almost every dbt command, except those that do not work with models, such as dbt debug and dbt init.

It represents your dbt project, including models, tests, data sources, macros, exposures, and more. It also includes the relationships between these items, which dbt uses to visualize the DAG (Directed Acyclic Graph).

Interestingly, even if you run only a subset of models (e.g. with --select), all resources will appear in the manifest. However, some properties, like compiled_code (called compiled_sql before dbt v1.3), might be missing for models outside the selection, since such properties only appear after a model is compiled and executed.

The manifest is used to render dbt docs and to perform state comparison. Having a production manifest is necessary to use selectors like state:modified, which compare your current project against it.
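You can check this on your own manifest after a partial run. Here is a rough sketch, assuming the manifest is already loaded into a Python dict; the function name split_by_compiled is hypothetical, and it looks at both the newer compiled_code key and the older compiled_sql key.

```python
def split_by_compiled(manifest):
    """Split model ids into (compiled, not_compiled) based on the manifest contents."""
    compiled, not_compiled = [], []
    for unique_id, node in manifest.get("nodes", {}).items():
        if node.get("resource_type") != "model":
            continue  # skip seeds, snapshots, tests, etc.
        # Newer manifests use "compiled_code"; older ones used "compiled_sql".
        has_code = bool(node.get("compiled_code") or node.get("compiled_sql"))
        (compiled if has_code else not_compiled).append(unique_id)
    return sorted(compiled), sorted(not_compiled)
```

After something like dbt run --select my_model, the first list should roughly match your selection, while the rest of the models end up in the second one.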

Since it is a simple JSON file, you can open it with any editor and explore it on your own.

Tip: By default, the file is formatted as a single line, which can make it difficult to read. Use this simple Python command to convert it to a properly formatted and indented view:

python3 -m json.tool manifest.json > manifest_formatted.json

The manifest has several top-level keys for each item type or configuration:

{

"metadata": {...}, # dbt version, generation time, etc

"nodes": {...}, # list of models, seeds, snapshots, tests

"sources": {...}, # list of data sources

"macros": {...}, # info about macros

"docs": {...}, # docs blocks defined in the project

"exposures": {...}, # list of exposures

"metrics": {...}, # dictionary of metrics

"groups": {...}, # dict of groups

"selectors": {...}, # user-defined selectors

"disabled": {...}, # list of disabled resources

"parent_map": {...}, # list of first-order parents (for DAG)

"child_map": {...}, # list of first-order children (for DAG)

"group_map": {...}, # mapping of group names to nodes

"semantic_models": {...} # dbt Semantic Models

}

By utilizing nodes, sources, exposures, and metrics, you can obtain a comprehensive list of all the resources in the project. By using parent_map and child_map, you can construct a DAG from these resources. Additionally, the description fields of the nodes, together with the docs property, let you identify which models and tests have documentation. The possibilities are endless!
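To make the DAG idea concrete, here is a minimal sketch that walks parent_map to collect everything upstream of a resource and counts resources by type. It assumes the manifest is already loaded into a dict (for example with json.load); the function names upstream and resources_by_type are my own.

```python
from collections import deque


def upstream(manifest, unique_id):
    """Return all ancestors of a resource by walking parent_map breadth-first."""
    parent_map = manifest.get("parent_map", {})
    seen = set()
    queue = deque(parent_map.get(unique_id, []))
    while queue:
        current = queue.popleft()
        if current in seen:
            continue
        seen.add(current)
        # parent_map only holds first-order parents, so keep walking upwards.
        queue.extend(parent_map.get(current, []))
    return seen


def resources_by_type(manifest):
    """Count resources across nodes, sources, exposures and metrics."""
    counts = {}
    for key in ("nodes", "sources", "exposures", "metrics"):
        for resource in manifest.get(key, {}).values():
            # Fall back to the top-level key if resource_type is absent.
            rtype = resource.get("resource_type", key)
            counts[rtype] = counts.get(rtype, 0) + 1
    return counts
```

Swapping parent_map for child_map in the same loop gives you everything downstream of a resource, which is handy for a quick impact analysis before changing a model.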

The manifest is the most basic and commonly used artifact as it contains all the essential information about your dbt project.

run_results.json

The run_results artifact stores the results of a dbt invocation. It only contains the result of the most recent run and does not keep a history of all runs.

When you execute a dbt command, such as dbt run, this artifact stores all the information about that run. This includes the full invocation command (with flags and parameters), the selectors used, as well as the results and statuses of each model, test, or other resource.

It contains four top-level keys:

{

"metadata": {...}, # dbt metadata

"results": [...], # results for each model in the run

"elapsed_time": 12.34, # elapsed time in seconds

"args": {...} # info about dbt command

}

The results array contains information about each resource in the run, limited to the selector you used. Each entry shows the SQL that was run, the timing, the name of the final table or view, and the status (for example, success or error). The top-level elapsed_time key indicates the total time the command took to execute, from start to finish (in seconds).

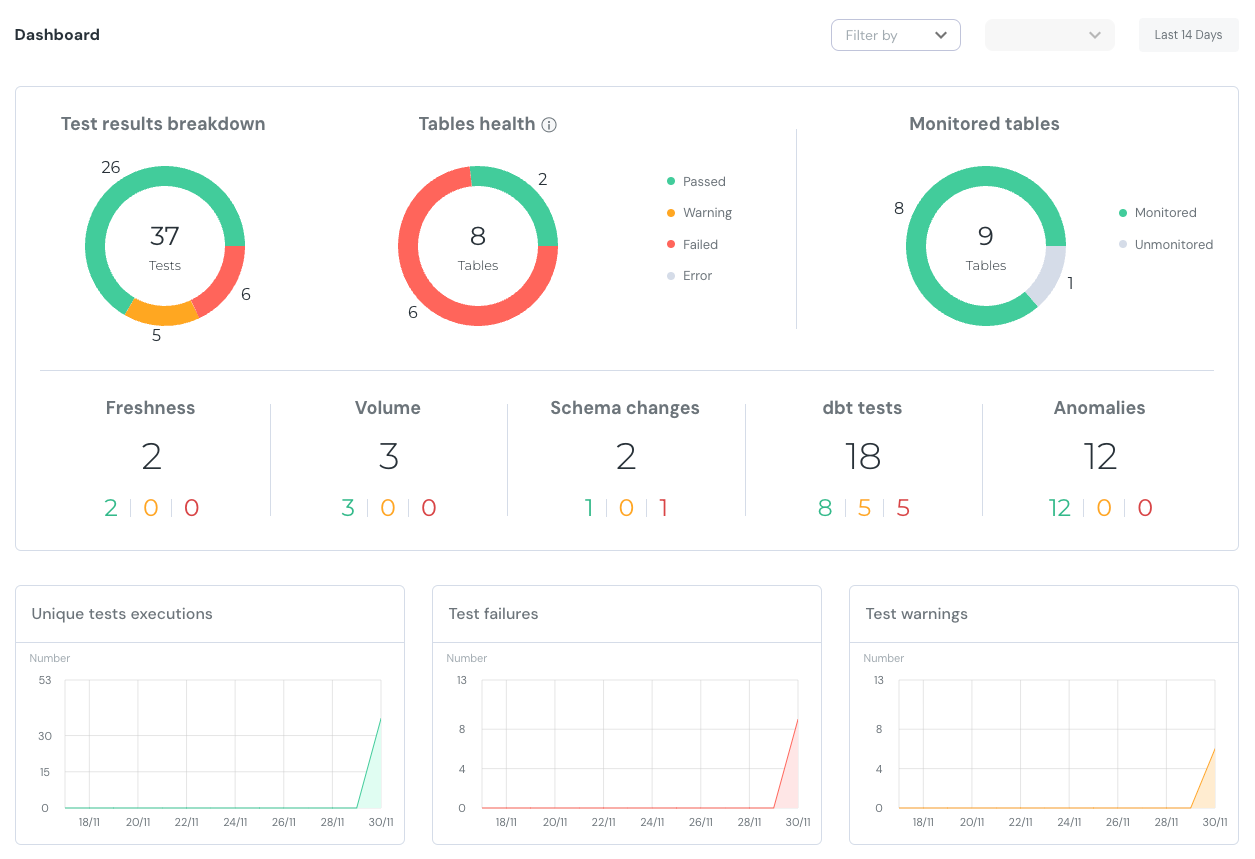
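As a taste of what you can do with the results array, here is a small sketch that ranks resources by execution time and lists the ones that failed. It assumes run_results.json is already loaded into a dict and relies on the unique_id, status, and execution_time fields of each result; the function names are hypothetical.

```python
def slowest(run_results, n=5):
    """Return the n slowest results as (unique_id, status, execution_time) tuples."""
    results = run_results.get("results", [])
    ranked = sorted(
        results,
        key=lambda r: r.get("execution_time") or 0.0,
        reverse=True,
    )
    return [
        (r["unique_id"], r.get("status"), r.get("execution_time"))
        for r in ranked[:n]
    ]


def failures(run_results):
    """Return the ids of resources whose status is 'error' or 'fail'."""
    bad_statuses = {"error", "fail"}
    return [
        r["unique_id"]
        for r in run_results.get("results", [])
        if r.get("status") in bad_statuses
    ]
```

Because the artifact only holds the latest invocation, you would need to save the output somewhere (a table, a file per run) if you want to track trends over time.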

By using run results, you can generate advanced analytics for your dbt project. For instance, consider the elementary dbt package. This package offers data observability capabilities, allowing you to extract statistics from run results artifacts, store them in your data warehouse, and create a comprehensive observability dashboard:

catalog.json

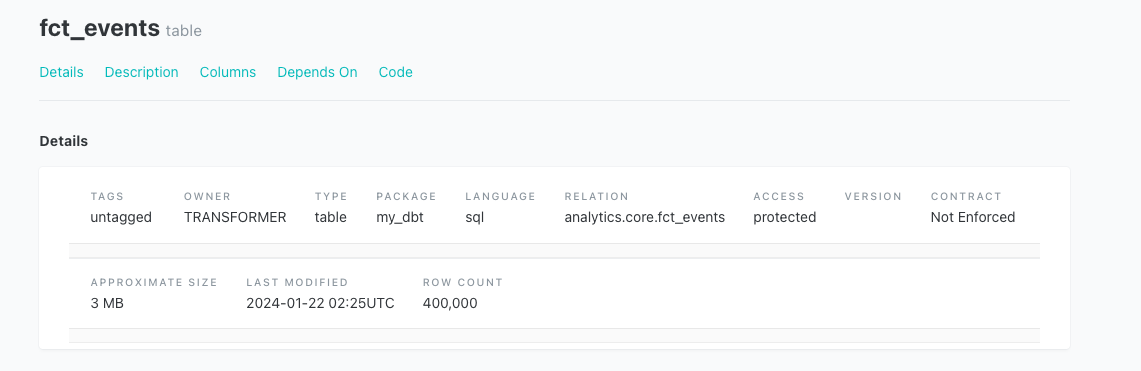

The catalog artifact is generated by running the dbt docs generate command. This file provides information from the data warehouse about the tables and views created from your dbt models. Essentially, it includes the name of each model in the database, details about the columns and their data types, as well as statistics like the number of rows, table size, and last modified date.

It has four top-level keys:

{

"metadata": {...}, # dbt metadata

"nodes": {...}, # list of tables/views for models

"sources": {...}, # list of tables/views for data sources

"errors": null # error for docs generate command

}

This artifact can be helpful for checking the percentage of documentation coverage. It also allows you to compare the columns declared in the model YAML with the columns that exist in the database, and then verify the existence of documentation using manifest.json. These and many other checks are implemented in the package called dbt_project_evaluator. (Let me know in the comments if you want a detailed tutorial on this package.)
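A documentation coverage check of this kind can be sketched in a few lines. The code below is a simplified illustration, not how dbt_project_evaluator does it: it assumes both files are loaded into dicts, that catalog nodes expose a columns dictionary keyed by column name, and that manifest columns carry a description field. Column names are lowercased because some warehouses report them in upper case.

```python
def column_doc_coverage(manifest, catalog):
    """Return {unique_id: share of database columns that have a description}."""
    report = {}
    manifest_nodes = manifest.get("nodes", {})
    for unique_id, catalog_node in catalog.get("nodes", {}).items():
        manifest_node = manifest_nodes.get(unique_id)
        if manifest_node is None:
            continue  # table exists in the catalog but not in this manifest
        db_columns = {name.lower() for name in catalog_node.get("columns", {})}
        documented = {
            name.lower()
            for name, column in manifest_node.get("columns", {}).items()
            if column.get("description")
        }
        if db_columns:
            report[unique_id] = len(db_columns & documented) / len(db_columns)
    return report
```

A value of 1.0 means every column found in the warehouse has a description in the YAML, while anything lower points at columns worth documenting.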

sources.json

The sources artifact displays the results of the dbt source freshness command. It records information about the freshness check for each data source with a defined check. This information includes the maximum available timestamp, query execution time, and the status of the check.

The file itself has a simple structure:

{

"metadata": {...}, # dbt metadata

"results": [...], # arrays of freshness checks

"elapsed_time": 1.23 # total execution time

}

For example, in dbt Cloud, the source freshness dashboard uses this artifact to display freshness statistics. However, you can build the same dashboard yourself by using the aforementioned elementary package to store the results of the checks in the data warehouse.
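If you only need a quick alert rather than a dashboard, a few lines are enough. This sketch assumes sources.json is loaded into a dict and that each result carries unique_id, status, and max_loaded_at fields, with pass as the healthy status; stale_sources is a name I made up.

```python
def stale_sources(sources_artifact):
    """Return (unique_id, status, max_loaded_at) for every check that did not pass."""
    stale = []
    for result in sources_artifact.get("results", []):
        if result.get("status") != "pass":
            stale.append(
                (
                    result.get("unique_id"),
                    result.get("status"),
                    # May be missing when the freshness query itself failed.
                    result.get("max_loaded_at"),
                )
            )
    return stale
```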

semantic_manifest.json

This file is a special artifact used by MetricFlow to build metrics for the dbt Semantic Layer. It contains descriptions of the models required to work with the semantic layer. With this file, MetricFlow can understand how to build your metrics, including which models to use and which tables to join.

A semantic manifest is generated every time your project is parsed. This happens when you invoke dbt parse or any of the run, build or compile commands. Please note that the semantic layer with MetricFlow was introduced in v1.6, so you may not have this file in earlier versions.

The file structure is quite simple, with only three top-level keys:

{

"semantic_models": [], # entities, dimensions and measures

"metrics": [], # metrics definitions

"project_configuration": {...} # additional configs

}

Outside of the Semantic Layer and MetricFlow, the use of this artifact is somewhat limited. I haven't come across any interesting applications of it so far. There are some third-party semantic layers, such as Cube, that integrate with dbt (check out this link), but they do not rely on MetricFlow definitions. Instead, they use their own way of describing metrics. If you know of any cool usage of the semantic manifest artifact, please let me know in the comments!
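Even without a killer use case, the file is easy to inspect. Here is a small sketch that builds an inventory of semantic models and metrics. It assumes the artifact is loaded into a dict and that each entry has a name, with dimensions and measures lists on semantic models and a type on metrics; treat those field names as assumptions to verify against your own file.

```python
def semantic_inventory(semantic_manifest):
    """Return (semantic models with their dimensions/measures, metric name -> type)."""
    models = {
        model.get("name"): {
            "dimensions": [d.get("name") for d in model.get("dimensions", [])],
            "measures": [m.get("name") for m in model.get("measures", [])],
        }
        for model in semantic_manifest.get("semantic_models", [])
    }
    metrics = {
        metric.get("name"): metric.get("type")
        for metric in semantic_manifest.get("metrics", [])
    }
    return models, metrics
```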

Final words

dbt artifacts provide a powerful way to gain insights into your dbt project. Essentially, they are simple JSON files that store valuable information about your models, run times, documentation, and more. By leveraging external dbt packages that read them, you can create a comprehensive view of your project and improve its performance and robustness.