There are several ways to run dbt jobs. For example, if you are using Apache Airflow or Dagster, you can do it there. Alternatively, you can choose to use dbt Cloud, which will manage the job execution for you.

However, if you only starting your journey with dbt, there is an alternative way — to run dbt with GitHub Actions. Simply put, Github actions is a CI/CD tool that let’s you run any type of workflow directly from your GitHub repository. It allows you to automate tasks such as running tests, building and deploying applications, and even running dbt jobs.

Let’s dive deeper into running dbt with GitHub Actions!

Basic setup

In this post I’ll show you how to run dbt jobs with Snowflake data warehouse, but it should be easily switchable to other warehouses.

Suppose you have the following setup:

working dbt project in some Github repository

profiles.yml file configured with env variables (will provide example below)

Github Actions allow you to run any arbitrary code on their virtual machines, you only need to setup a workflow and define steps. To setup a workflow create a new file called dbt_prod.yaml inside of .github/workflow folder in your root directory. Inside you need to define a configuration of the workflow and the steps you want to perform.

The minimal workflow to execute dbt run on a schedule may look like this:

name: Daily dbt production run

# CRON job to run dbt at midnight UTC(!) everyday

on:

schedule:

- cron: '0 0 * * *'

# Setting some Env variables to work with profiles.yml

env:

DBT_PROFILE_TARGET: prod

DBT_PROFILE_USER: ${{ secrets.DBT_PROFILE_USER }}

DBT_PROFILE_PASSWORD: ${{ secrets.DBT_PROFILE_PASSWORD }}

jobs:

dbt_run:

name: Scheduled dbt refresh and test

runs-on: ubuntu-latest

timeout-minutes: 90

# Steps of the workflow:

steps:

- name: Checkout

uses: actions/checkout@v3

- name: Setup Python environment

uses: actions/setup-python@v4

with:

python-version: "3.11"

- name: Install dependencies

run: |

python -m pip install --upgrade pip

python -m pip install -r requirements.txt

- name: Install dbt packages

run: dbt deps

# optionally use this parameter

# to set a main directory of dbt project:

# working-directory: ./my_dbt_project

- name: Run the models

run: dbt run

# working-directory: ./my_dbt_project

- name: Run tests

run: dbt test

# working-directory: ./my_dbt_projectThe workflow is quite straightforward if you look closely.

Let's go through each step:

Our workflow will be triggered by a Cron (a scheduler that can execute tasks at specified time).

Since dbt requires credentials to access your database, we define them as secret Github variables. I will show you how to create them shortly.

The main pipeline consists of several steps: installing python dependencies, installing dbt packages, running dbt models, and finally testing them.

For the whole setup to work as expected you also need to have a proper profiles.yml file inside of your dbt project. It may look like this:

default:

outputs:

prod:

type: snowflake

account: "abc12345.us-east-1"

user: "{{ env_var('DBT_PROFILE_USER') }}"

role: "daily_runner"

password: "{{ env_var('DBT_PROFILE_PASSWORD') }}"

database: "analytics"

warehouse: "daily_jobs_xl"

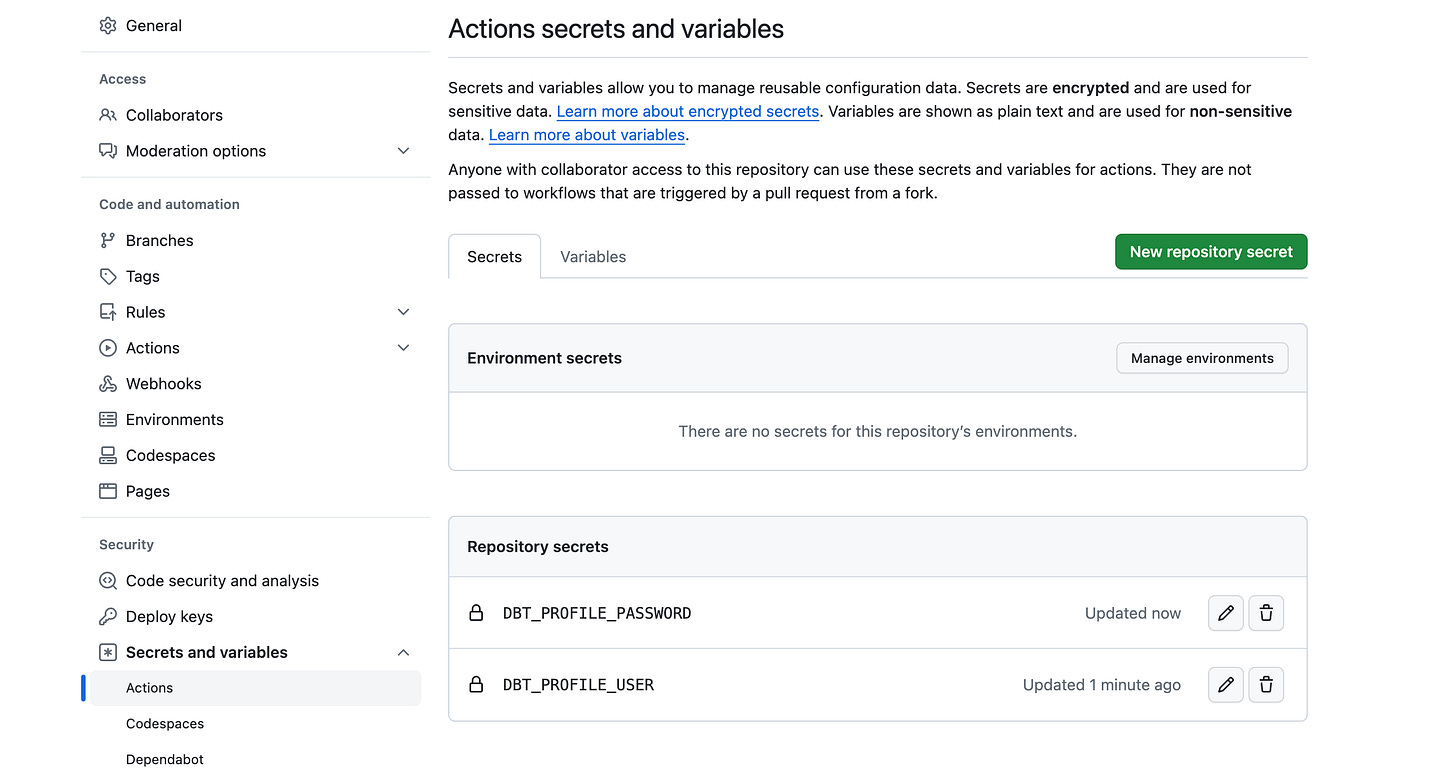

schema: "dbt"As you can see, for username and password we use env variables defined in our workflow. To create those secrets in Github go to Settings → Secrets and variables → Actions and then press “New repository secret”:

Once everything is ready, you can proceed to commit all the changes to the repository. At the specified time, the Cron job will trigger the workflow, which will then start updating your dbt models 🎉!

Want more? Let’s see what else can we add to the workflow.

Adding more steps

Once you have your dbt workflow up and running with Github actions, you can start thinking about making it more advanced by adding additional steps. In this section, I will provide a few examples of how to do so.

Seeds, freshness and docs

If you use dbt seeds in your project you should definitely add this step to your workflow. Just add this step before your dbt run command:

- name: Seed seeds

run: dbt seedNext, if you test your sources for freshness, you should definitely add freshness test:

- name: Check source freshness

run: dbt freshnessFinally, you can generate dbt docs and send them to S3 storage, for example. To do this, simply add the AWS CLI and copy the documentation artifacts to the storage:

- name: Install AWS CLI

run: |

sudo apt update

sudo apt install --assume-yes awscli

- name: Configure credentials for aws CLI

uses: aws-actions/configure-aws-credentials@v2

with:

aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}

aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}

aws-region: "us-east-1"

# Don't forget to create env variable for S3 bucket name

- name: Upload the manifest.json to S3

run: aws s3 cp ./target/manifest.json s3://${{ env.S3_BUCKET_NAME }}/dbt-docs/manifest.json

- name: Upload the sources.json to S3

run: aws s3 cp ./target/sources.json s3://${{ env.S3_BUCKET_NAME }}/dbt-docs/sources.json

- name: Upload index.html

run: aws s3 cp ./target/index.html s3://${{ env.S3_BUCKET_NAME }}/dbt-docs/index.html

To make dbt docs available through the browser, you will need to configure it in the AWS console. However, this task is typically handled by DevOps or Cloud Engineers.

Build instead of run

Another way to improve your workflow is to consider using the dbt build command instead of dbt run.

The build command simplifies the process by combining the seed, freshness, test, and run stages. However, depending on your specific case, you may still prefer to run each step manually and in a specified order. The choice is ultimately yours.

CI for Pull Requests

Github Actions can be useful not only for running periodic jobs, but also for checking the health of your project during the Pull Request stage.

For example, you can create a CI workflow that checks the quality of new code by running automated checks on each commit. This is similar to pre-commit hooks I described in previous post, but now it's done on the Github side.

The final workflow my look like this (don’t forget to create a new yaml file in .github/workflows folder):

name: dbt CI pipeline

# Triggers on push to main branch

# or Pull Request to any branch

on:

push:

branches:

- main

pull_request:

branches:

- "*"

# Set needed env variables

env:

DBT_PROFILE_TARGET: dev

DBT_PROFILE_USER: ${{ secrets.DBT_PROFILE_USER }}

DBT_PROFILE_PASSWORD: ${{ secrets.DBT_PROFILE_PASSWORD }}

jobs:

ci-tests:

name: dbt run and test CI checks

runs-on: ubuntu-latest

timeout-minutes: 90

steps:

- name: Checkout

uses: actions/checkout@v3

- name: Setup Python environment

uses: actions/setup-python@v4

with:

python-version: "3.11"

- name: Install Python dependencies

run: |

pip install --upgrade pip

pip install -r requirements.txt

- name: Install dbt deps

run: dbt deps

- name: Run seeds

run: dbt seed

- name: Compile queries

run: dbt compile

- name: Run SQL linter

run: sqlfluff lint ./models

# Alternatively, run pre-commit hooks.

# For this step, however, you may

# need to have additional configuration

- name: Run pre-commit hooks

run: pre-commit runNow, every time you add new code to the repository, it will be automatically checked for common mistakes and code formatting principles.

I hope it was useful and you found one or two interesting bits for yourself! 🔥

If you liked this issue please subscribe and share it with your colleagues, this greatly helps me developing this newsletter!

See you next time 👋